Introduction to Tinfoil

AI is everywhere

AI is permeating every aspect of our personal and work lives. If you are a developer, there is a chance that you are using AI assistants to help you code. If you are a radiologist, you may be using image recognition pipelines to help diagnose your patients. If you are using a messaging app or a social network, some level of moderation might be happening using large language models. And if you are reading this, you might even be building AI applications yourself! As AI becomes increasingly ubiquitous, ensuring the privacy and security of the sensitive data we share with these systems is more critical than ever.

The Cloud Dependency Challenge

Most AI-powered applications require significant processing power, often too much to run locally on the end user's device. Instead, they send requests to servers that run the latest and largest AI models on specialized GPUs. As a result, more and more software is becoming distributed across third-party servers and services. Because this is often the only practical way to integrate powerful AI models into products, more private data than ever is now being sent to these third-party servers.

The Trust and Privacy Dilemma

Unfortunately, this means that users need to trust a whole chain of third parties: the SaaS provider, the company that provides the AI model as a service (e.g., OpenAI), and the cloud provider (e.g., Azure). This opens the door to privacy violations and data breaches at an unprecedented scale. Currently, end users have no way to verify that these parties are keeping their promises to handle data properly, or that they aren't collecting, training on, or selling private data to interested parties.

The Enterprise Privacy Challenge

For most companies, providing strong privacy guarantees for their users is extremely difficult. Even if you want to protect your customers' data, you're forced to trust a chain of third-party API providers and cloud platforms, and then ask your own users to trust you in turn. In practice, this means you have to assure your customers that you won't mishandle their data—but they have no way to verify this for themselves.

Technical Enforcement: The Gold Standard

A few privacy-focused companies with vast resources, like Apple, have managed to build special infrastructure that enforces technical barriers (sometimes called "technical enforcement" or "technical impossibility"). These barriers prevent the company itself from accessing user data, even when compelled by a court order. This is the core idea behind Apple's Private Cloud Compute: not even Apple can access your private data, by design.

The Privacy Gap

But for everyone else, there is currently no easy way to offer this level of privacy. As a result, people and companies are reluctant to use products that rely on AI-as-a-service. This reluctance is understandable: companies do violate their own privacy policies, and any company holding user data remains exposed to data breaches.

Our mission: Bridge the Gap

At Tinfoil, we are building something different: a platform for AI with verifiable privacy guarantees. The way we see it, AI is becoming an extension of local software that used to have a physical security boundary. With Tinfoil, we are extending these same physical security boundaries to the cloud, thanks to secure hardware enclaves. This makes it possible to run AI-powered applications in the cloud with security guarantees similar to on-prem solutions.

What makes Tinfoil different?

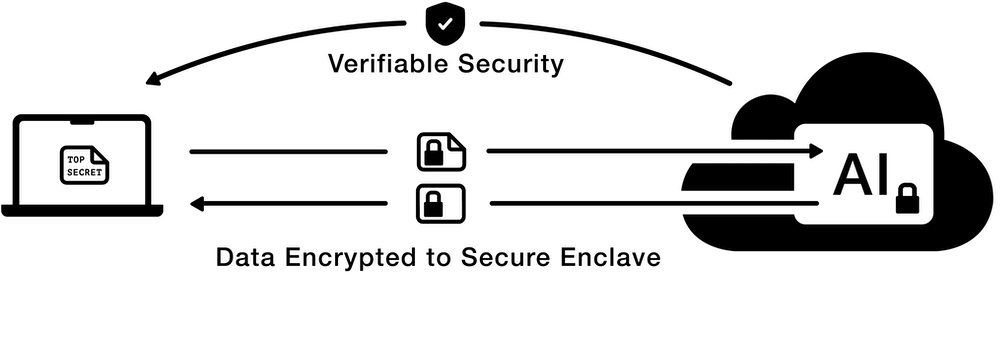

In traditional cloud computing, isolation is one-directional and hierarchical: the cloud provider is protected from its tenants, but not the other way around. There are no verifiable mechanisms to isolate private user data from software managed by the cloud or the service provider. At Tinfoil, we leverage secure enclaves and confidential computing, a set of hardware mechanisms present in the latest server-grade CPUs and GPUs, to isolate sensitive workloads at the hardware level. These isolation guarantees can be automatically verified on every connection via remote attestation. This ensures that users' private data is never accessible to any other software running on the same machine or to any third party, and that trust is replaced with verifiable security. This provides the highest level of security currently available in cloud computing.
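To make the idea of verifying on every connection more concrete, here is a minimal sketch of the kind of check a client could run before sending any private data. It is not Tinfoil's actual client or report format: `AttestationReport`, `fingerprint`, and `verify_connection` are hypothetical names, and the sketch assumes that the expected code measurement comes from a trusted published source and that the hardware vendor's signature over the report has already been validated.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    """Hypothetical, simplified attestation report (not a real wire format)."""
    code_measurement: str     # hex digest of the code loaded into the enclave
    tls_key_fingerprint: str  # hex digest of the enclave's TLS public key


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_connection(
    report: AttestationReport,
    expected_measurement: str,
    observed_tls_key: bytes,
) -> bool:
    """Accept the connection only if the enclave runs the expected code
    and the TLS key we are talking to is the one bound to the report.

    Assumption: the hardware vendor's signature over the report has
    already been checked; that step is omitted from this sketch.
    """
    measurement_ok = hmac.compare_digest(
        report.code_measurement, expected_measurement
    )
    key_ok = hmac.compare_digest(
        report.tls_key_fingerprint, fingerprint(observed_tls_key)
    )
    return measurement_ok and key_ok


if __name__ == "__main__":
    # Illustrative placeholder values only.
    published_code = b"enclave image v1"
    enclave_key = b"enclave public key"
    report = AttestationReport(
        fingerprint(published_code), fingerprint(enclave_key)
    )
    if verify_connection(report, fingerprint(published_code), enclave_key):
        print("attestation verified; safe to send data")
    else:
        print("attestation failed; refusing to send data")
```

The sketch checks two things: that the code running inside the enclave matches what was published, and that the encrypted channel terminates inside that same enclave rather than at some other software on the machine. If either check fails, the client refuses to send data.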

The Tinfoil guarantee:

No access to customers' private data. Not for you, not for us, not for the cloud provider, not for third parties. All supported by verifiable security claims users can check for themselves. Everything remains encrypted; the device’s security boundary is extended to include only the hardware enclave.

The Future of AI is Private

As AI continues to transform every industry — from software development to social media — the need for privacy has never been more critical. Join us in building a future where privacy and AI innovation go hand in hand.

Further reading

To understand how we've built Tinfoil to have verifiable privacy, you can read our technical overview of Tinfoil enclaves. You can also read how we built trust in our platform by using a combination of open source code, automated builds, transparency logs, and remote attestation.
