Publish, Audit, Attest: How Tinfoil Builds Trust

Introduction

At Tinfoil, we are building infrastructure to help you deploy AI workloads and applications inside isolated, confidential computing environments. But in a world of frequent data breaches and privacy violations, how can users actually trust that their sensitive data will be protected?

The status quo

The reality is that traditional cloud deployments rely entirely on trust. Companies make promises about data privacy in their terms of service, but these "pinky promises" do nothing to prevent data leaks, breaches, or blatant privacy violations by the very same companies claiming to protect your data.[1] The scale of this problem is huge. A 2024 survey of over 1,000 data protection professionals found that 74% believe authorities would find "relevant violations" if they were to inspect an average company.[2]

Our approach

Tinfoil is built differently. Rather than asking users to blindly trust us, we've created a system where users can verify for themselves that their data is truly protected. In this post, we'll explain exactly how we do this through a combination of open source code, automated builds, transparency logs, and hardware attestation.

Why trust is broken today

Most cloud providers still ask you to accept policy promises instead of offering technical proof. Insider access, opaque build pipelines, and complex supply chains make it hard—often impossible—for users to know what code actually runs or how their data is handled in practice.

How Tinfoil changes the model

We replace blind trust with verifiable guarantees: public code, automated builds, transparency logs, and hardware attestation that clients verify automatically. The next section walks through each step of this verification flow.

How Tinfoil builds trust with provable security

At Tinfoil, we want end-users to be able to verify for themselves that an AI service running with Tinfoil can be trusted with their private data, without having to trust Tinfoil or the service provider that is using our platform.

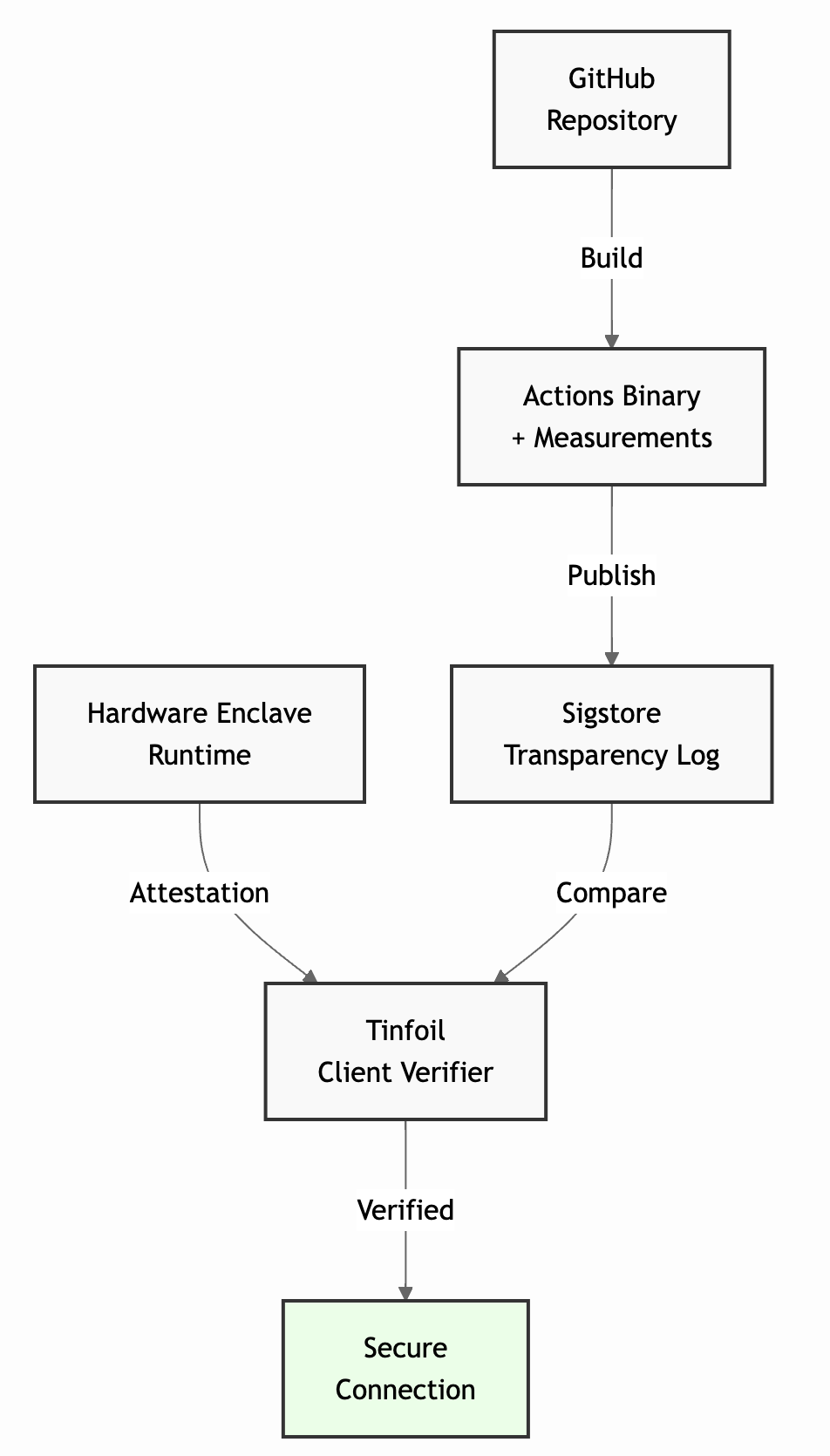

To make this possible, we have built a system that combines:

- code transparency with public auditability,

- automated build,

- versioning on a public transparency log, and

- automated code attestation.

These steps are illustrated in Figure 1. Combined, these elements ensure that the code running inside the Tinfoil enclave is trustworthy and, more importantly, that end-users can easily and automatically verify this. For this blog post, we will assume that both the Tinfoil execution environment and all the code running inside the enclave are public. Sandboxing techniques can be used to enforce similar confidentiality guarantees for applications that use private codebases, as long as the integrity of the Tinfoil environment is still verifiable.

Figure 1: Overview of the steps involved in verifying the Tinfoil environment.

Step 1: Code Transparency and Public Auditing

All the code composing the Tinfoil environment is published on our public GitHub. This is crucial for building trust in our infrastructure: the code can be audited by the security community and inspected for potential backdoors or vulnerabilities. We make sure the code is simple enough to audit easily, building on top of reputable open source technologies and making a significant effort to minimize codebase complexity. All the enclave code fits into a handful of files, all written in Go, and we went to great lengths to ensure everything is well documented for easy auditing. The whole codebase can even fit in the context window of a standard LLM!

Step 2: Automated Build

For auditing to be useful, we need to make sure the binaries we distribute correspond to the code we've published. To this end, all code is automatically compiled by GitHub Actions to generate the corresponding binaries. This still requires trusting GitHub Actions to build the published code faithfully. We are actively working on removing this trust assumption by developing reproducible builds, which would make it possible for anyone to verify the compilation step independently and asynchronously.
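To illustrate what a reproducible-build check could look like, here is a minimal sketch: rebuild the binary from the published commit, then compare its digest with that of the distributed binary. SHA-256 and the function names `file_digest` and `builds_match` are assumptions made for this example; the post does not specify the digest or tooling actually used.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        # iter() with a sentinel stops once read() returns empty bytes.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def builds_match(distributed: Path, rebuilt: Path) -> bool:
    """True when two independently produced binaries are byte-identical."""
    return file_digest(distributed) == file_digest(rebuilt)
```

With a reproducible build, `builds_match` returning `True` for an independent rebuild would mean the distributed binary really came from the published source, without relying on the build service.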

Step 3: Versioning on a Public Transparency Log

The application code is published on GitHub and automatically compiled to generate a binary. When the binary is compiled, cryptographic measurements are generated to create an artifact that is published onto an immutable transparency log managed by Sigstore. The log entry is signed by the GitHub Actions worker and also contains a reference to the original repo, the commit, and the toolchain used for the build. Transparency logs have been used for a long time to enforce public auditability of Internet certificates (i.e., SSL/TLS certificates).[3] With Tinfoil, we use them as an "append-only" data structure to prevent us from mounting supply-chain attacks and tampering with the code we ship. Since we use a transparency log, auditing can happen asynchronously, as the code, binary, and corresponding measurements will always be accessible.
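The "append-only" property can be illustrated with a toy hash-chained log, in which every entry commits to all entries before it. This is a deliberate simplification for intuition only: production transparency logs such as Certificate Transparency use Merkle trees, and the `ToyLog` class and its entry fields are hypothetical, not Sigstore's format or API.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _hash_entry(prev_hash: str, payload: dict) -> str:
    # Canonical JSON so the same payload always hashes identically.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode()).hexdigest()


@dataclass
class ToyLog:
    """Hash-chained log: each entry hash covers the previous entry hash."""
    entries: list = field(default_factory=list)  # list of (payload, entry_hash)

    def append(self, payload: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        entry_hash = _hash_entry(prev, payload)
        self.entries.append((payload, entry_hash))
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any rewritten entry breaks it."""
        prev = GENESIS
        for payload, entry_hash in self.entries:
            if _hash_entry(prev, payload) != entry_hash:
                return False
            prev = entry_hash
        return True
```

An auditor who remembers the latest entry hash can detect any later attempt to rewrite history, because changing an old payload changes every hash after it.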

Verifying Sigstore entries

We built a client verifier, a public piece of code that runs client-side, either in the user's browser or inside the client application. It automatically retrieves a log entry from Sigstore and compares it to the attestation report from the enclave. This ensures that the entry is correct, was generated by an independent GitHub Actions worker, has not been tampered with, and corresponds to the publicly available code on the official Tinfoil GitHub repository.

Step 4: Attestation

To ensure we cannot tamper with the final binary during deployment, we need a mechanism to verify that the client is connected to a trustworthy enclave that is running the code published on Sigstore. When a user establishes a secure connection with the enclave, the enclave presents the user with an attestation proof. An attestation proof is a series of cryptographic statements, all assembled and signed by a remote attestation mechanism implemented in hardware. This proof contains information about the hardware, how it was configured to enforce isolation, and a measurement (e.g., a hash) of the software it is running. This measurement is obtained by the hardware during enclave setup by hashing the totality of the code and private memory. The proof makes it possible for the user to verify that the environment is running on trustworthy hardware, that it has been correctly configured, and to obtain the cryptographic measurement of the Tinfoil environment running inside the enclave.
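As a rough intuition for what a software measurement is, the sketch below folds a sequence of memory regions into a single digest, so that changing, reordering, or dropping any region yields a different value. The choice of SHA-384 and the "extend"-style folding are assumptions for illustration; each hardware vendor defines its own measurement scheme, and the signed attestation itself is produced in hardware and is not modeled here.

```python
import hashlib
from typing import Iterable


def measure(regions: Iterable[bytes]) -> str:
    """Fold memory regions into one hex digest, in load order.

    Each step hashes the running digest together with the hash of the
    next region, so the result depends on every byte and on the order.
    """
    digest = bytes(48)  # SHA-384 output is 48 bytes; start from all zeros
    for region in regions:
        region_hash = hashlib.sha384(region).digest()
        digest = hashlib.sha384(digest + region_hash).digest()
    return digest.hex()
```

For example, `measure([b"kernel", b"app"])` and `measure([b"kernel", b"app!"])` produce different digests, which is what lets a verifier detect a modified binary.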

Verifying attestation proofs

Our client verifier is also in charge of verifying the correctness of the attestation proofs generated by the hardware enclave.

Step 5: Do We Have a Match?

Once the client-side verifier has checked the correctness and authenticity of the attestation proof coming from the hardware and the correctness of the Sigstore log entry, there is only one simple check left to ensure the Tinfoil enclave is correctly set up: check that the measurements from the enclave match the measurements in the Sigstore log entry. If they match, we know the hardware is correctly running a trusted piece of code, published on the transparency log. This final check is automatically performed by the verifier and, if it fails, will prevent the client from using the enclave.
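The overall decision can be sketched as a fail-closed function: the client proceeds only if the attestation proof is valid, the log entry is valid, and the two measurements are identical. This is a minimal sketch, not Tinfoil's verifier; `verify_enclave`, `VerificationError`, and the boolean inputs (standing in for the earlier checks) are hypothetical names, and measurements are assumed to be hex strings.

```python
import hmac


class VerificationError(Exception):
    """Raised when the client must refuse to use the enclave."""


def verify_enclave(attestation_valid: bool, log_entry_valid: bool,
                   attested_hex: str, logged_hex: str) -> None:
    """Return normally only if every check passes; raise otherwise."""
    if not attestation_valid:
        raise VerificationError("attestation proof failed verification")
    if not log_entry_valid:
        raise VerificationError("transparency log entry failed verification")
    # Decode to bytes so the comparison ignores hex letter case.
    attested = bytes.fromhex(attested_hex)
    logged = bytes.fromhex(logged_hex)
    # compare_digest is a constant-time comparison; the measurements are
    # public, so this is a defensive habit rather than a requirement.
    if not hmac.compare_digest(attested, logged):
        raise VerificationError("enclave measurement does not match log entry")
```

Raising an exception instead of returning a boolean makes it harder for calling code to ignore a failed check by accident. Malformed hex input raises `ValueError`, which also stops the connection.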

Understanding Remaining Trust Assumptions

While we've focused on eliminating the need to trust Tinfoil's operations, it's important to acknowledge the remaining trust assumptions in our system.

Trust in the Hardware Manufacturer

Our security model builds upon the confidential computing features implemented by hardware manufacturers like NVIDIA, AMD, and Intel. These manufacturers follow industry standards, undergo security audits, and maintain certification processes for their hardware security features. Ultimately, the security guarantees Tinfoil provides are rooted in the underlying secure hardware enclaves. No system is ever perfectly secure, but these are the strongest foundations for cloud security today. Stay tuned for a detailed look at sophisticated hardware-level threats and how we are hardening our platform against them.

Infrastructure Dependencies

Our transparency pipeline depends on several third-party services:

- GitHub for hosting our open source code and running automated builds

- Sigstore for maintaining the transparency log

- The Go compiler and its dependencies

While these are well-established, security-focused platforms used by millions of developers worldwide, they represent additional links in our trust chain. We mitigate these risks by minimizing external dependencies and keeping the codebase small and simple. Ultimately, our goal is to further reduce trust through reproducible builds, allowing anyone to verify the entire build process independently.

Who Verifies The Verifier?

As in all systems that try to reduce trust, there is an inherent bootstrapping issue: we need to trust the first link in the chain of trust.[4] In our case, this root of trust is the client-side verifier, which is responsible for checking the attestation and build measurements. Thankfully, the verifier is small enough that the code can be directly inspected. For all platforms, we also publish the code of the verifier so a sophisticated client such as an enterprise customer can compile and distribute it themselves.

Conclusion

We believe these remaining trust assumptions represent an acceptable trade-off between security and practicality. Compared to traditional cloud deployments that require implicit trust in the entire stack, we've reduced the trust assumptions to our Tinfoil environment (which has its root of trust in the hardware manufacturer, e.g., NVIDIA), made it easy to audit our entire codebase, and gone to great lengths to ensure it is transparent, trustworthy, and automatically attested on every client connection.

Footnotes

1. As evidenced by the nearly $4.4 billion in fines and penalties assessed for major data breaches, including cases where companies like Meta, Equifax, and T-Mobile had legal obligations to protect user data. Source: CSO Online - Biggest data breach fines and penalties

2. According to a survey by NOYB (None of Your Business) published on Data Protection Day 2024, showing widespread non-compliance with data protection regulations even from the perspective of company insiders. Source: NOYB - Data Protection Day: 74% of insiders see 'relevant violations' at most companies

3. CT logs make all issued TLS certificates public on a distributed ledger, allowing efficient detection of mistakenly or maliciously issued certificates. Source: Certificate Transparency

4. A fundamental challenge in security systems is establishing initial trust: you must trust at least one component to bootstrap a chain of trust. Source: Bootstrapping Trust in Modern Computers
